Powerful Reasons Why GPU Hosting Outperforms Traditional Hosting for Modern Workloads

Enterprises today are navigating an inflection point in compute strategy. Traditional hosting models, long optimized for websites, ERP systems, and databases, are now being re-evaluated in light of growing demand for high-performance computing. As machine learning, computer vision, and data-intensive AI pipelines become mainstream, there’s a clear shift toward GPU-backed infrastructure.

This isn’t a conversation about abandoning one model for another. It’s about choosing the right environment for the right workload. And for CTOs, CXOs, and technology architects, understanding the trade-offs between traditional compute hosting and GPU as a Service is now essential to future-proofing enterprise architecture.

The Nature of Enterprise Compute Workloads Is Evolving

Traditional enterprise applications, such as CRM systems, transaction processing, and web portals, typically rely on CPU-bound processing. These workloads benefit from multithreading and high clock speeds but rarely need massively parallel computation. This is where traditional VPS or dedicated hosting has served well.

But modern enterprise compute workloads are changing. AI inference, deep learning model training, 3D rendering, data simulation, and video processing are now key components of digital transformation initiatives. These tasks demand massive parallelism, high memory bandwidth, and sustained computational throughput that CPU-centric hosting architectures cannot deliver efficiently.

The global GPU cloud market was valued at USD 3.17 billion in 2024 and is projected to reach USD 47.24 billion by 2033, a CAGR of roughly 35% (Source).
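The growth rate quoted above can be checked directly from the two market figures. The sketch below assumes annual compounding over the nine years from 2024 to 2033; the dollar values are the ones cited, and nothing else is added.

```python
# Checks the compound annual growth rate implied by the cited market figures.
start_value = 3.17   # USD billion, 2024 (cited figure)
end_value = 47.24    # USD billion, 2033 projection (cited figure)
years = 2033 - 2024  # 9 annual compounding periods (assumption)

# CAGR = (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1
print(f"Implied CAGR: {cagr:.1%}")  # prints "Implied CAGR: 35.0%"
```

The result agrees with the roughly 35% figure stated alongside the projection.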

What Makes GPU Hosting Different?

A GPU cloud is built around infrastructure optimized for graphics processing units (GPUs), which are designed for parallel data processing. This makes them particularly suitable for workloads that need simultaneous computation across thousands of cores, a scale that CPUs, with far fewer and more general-purpose cores, aren’t built for.
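One way to see why only some workloads gain from thousands of cores is Amdahl's law, a standard model that the original text does not cite. The sketch below is a simplification under stated assumptions: GPU cores are treated as idealized workers, and memory bandwidth, data transfer, and kernel launch overhead are ignored. The two workload profiles and their parallel fractions are hypothetical, not benchmarks.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a job can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial = 1.0 - parallel_fraction
    # The serial part runs at normal speed; only the parallel part is divided.
    return 1.0 / (serial + parallel_fraction / workers)

# Hypothetical workload profiles (assumed parallel fractions, not measurements).
profiles = [("mostly serial, e.g. transaction logic", 0.10),
            ("highly parallel, e.g. matrix math", 0.99)]
for label, p in profiles:
    for n in (16, 5000):
        print(f"{label}: {n} workers -> {amdahl_speedup(p, n):.1f}x")
```

With these assumptions, the mostly serial profile barely improves (about 1.1x) no matter how many workers are added, while the highly parallel profile keeps gaining (about 13.9x at 16 workers, about 98x at 5000). This is the workload-compatibility point in numerical form.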

In a GPU as a Service model, organizations don’t buy or manage GPU servers outright. Instead, they tap into elastic GPU capacity from a service provider, scaling up or down based on workload requirements.
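Scaling up or down based on workload requirements usually comes down to a rule that maps pending work to a GPU count within a budgeted range. The function below is a minimal, hypothetical policy (its name, parameters, and default limits are assumptions, not any provider's API): request enough GPUs to drain the job queue, never fewer than a floor and never more than a cap.

```python
import math

def desired_gpu_count(queued_jobs: int, jobs_per_gpu: int,
                      min_gpus: int = 0, max_gpus: int = 8) -> int:
    """Hypothetical scaling rule: enough GPUs to drain the queue, within bounds."""
    if jobs_per_gpu < 1:
        raise ValueError("jobs_per_gpu must be at least 1")
    if queued_jobs < 0:
        raise ValueError("queued_jobs cannot be negative")
    needed = math.ceil(queued_jobs / jobs_per_gpu)
    # Clamp to the configured floor and cap.
    return max(min_gpus, min(max_gpus, needed))

print(desired_gpu_count(queued_jobs=0, jobs_per_gpu=4))    # 0: scale to zero when idle
print(desired_gpu_count(queued_jobs=10, jobs_per_gpu=4))   # 3
print(desired_gpu_count(queued_jobs=100, jobs_per_gpu=4))  # 8: capped at max_gpus
```

A floor of zero lets idle capacity be released entirely; a non-zero floor trades some idle cost for faster response to new jobs.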

GPU hosting is especially suited for:

- Machine Learning (ML) model training

- Natural Language Processing (NLP)

- AI-driven analytics

- High-resolution rendering

- Real-time fraud detection engines

When hosted via a GPU cloud, these workloads run with significantly improved efficiency and reduced processing times compared to CPU-centric hosting setups.

Traditional Hosting

While GPUs dominate headlines, CPU hosting is far from obsolete. Traditional hosting continues to be ideal for:

- Web hosting and CMS platforms

- Email and collaboration tools

- Lightweight databases and file servers

- Small-scale virtual machine environments

- Static or low-traffic applications

For predictable workloads that don’t require large-scale parallel processing, traditional setups offer cost efficiency and architectural simplicity.

Pairing traditional hosting with high-performance GPUs via cloud integrations creates a balanced environment, one that supports both legacy applications and newer AI workloads.

The Growing Demand for AI Hosting in India

Across sectors, from banking and healthcare to manufacturing and edtech, organizations are investing in artificial intelligence. With that investment comes the need for reliable AI hosting in India that respects data localization laws, ensures compute availability, and meets uptime expectations.

Choosing GPU as a Service within the Indian jurisdiction allows enterprises to:

- Train and deploy AI models without capital expenditure

- Stay aligned with Indian data privacy regulations

- Access enterprise-grade GPUs without managing the hardware

- Scale compute power on demand, reducing underutilization risks

As AI becomes more embedded in business logic, India’s need for GPU infrastructure is set to grow, a projection grounded not in speculation but in current operational trends across regulated industries.

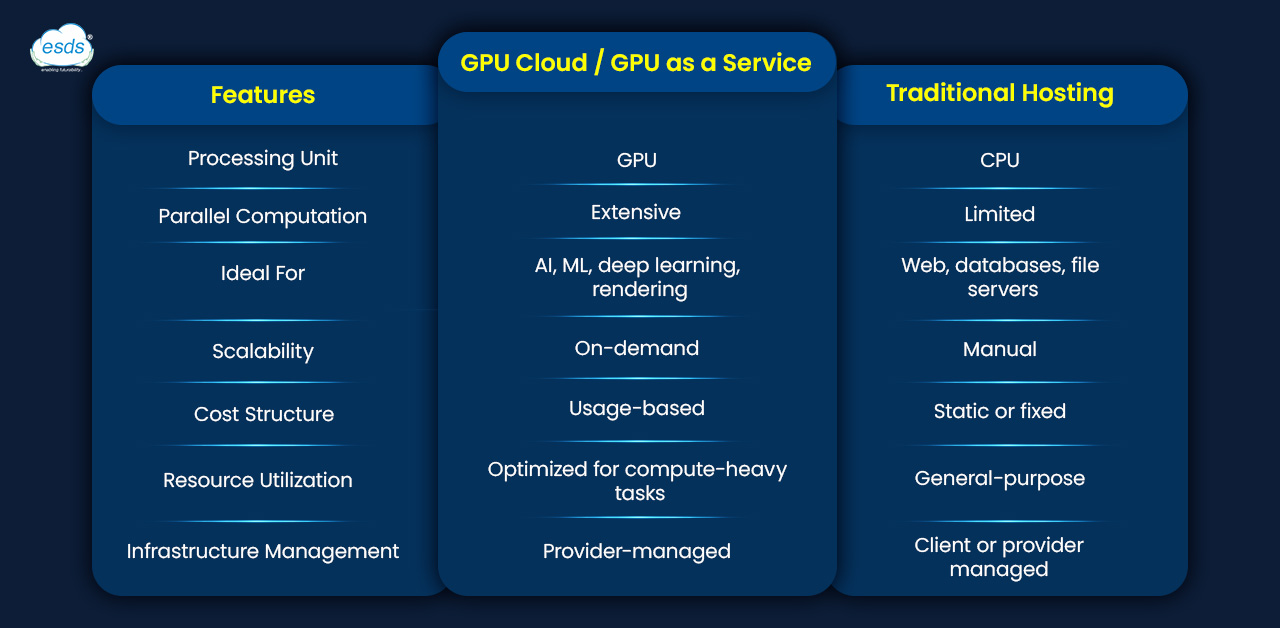

GPU Cloud vs Traditional Hosting

This comparison isn’t about which model is better; it’s about workload compatibility. For enterprises juggling diverse applications, hybrid infrastructure makes practical sense.

Security, Isolation & Compliance

When it comes to hosting enterprise-grade workloads, especially in AI and data-sensitive sectors, isolation and compliance are non-negotiable. A GPU as a Service model hosted in a compliant GPU cloud environment typically provides:

- Role-based access controls (RBAC)

- Workload-level segmentation

- Data encryption in transit and at rest

- Audit trails and monitoring dashboards

This becomes even more relevant for AI hosting in India, where compliance with regulatory frameworks such as RBI guidelines, IT Act amendments, and sector-specific data policies is mandatory.
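The role-based access controls and audit trails listed above work together: each request is checked against the permissions of the caller's role, and every decision is recorded. The sketch below is a hypothetical, in-memory illustration; the roles, actions, and function names are assumptions, and a production platform would enforce this in its identity layer and write to tamper-evident storage rather than a Python list.

```python
from enum import Enum

class Action(Enum):
    SUBMIT_JOB = "submit_job"
    VIEW_LOGS = "view_logs"
    MANAGE_QUOTA = "manage_quota"

# Hypothetical role-to-permission mapping; real platforms define their own roles.
ROLE_PERMISSIONS: dict[str, set[Action]] = {
    "data_scientist": {Action.SUBMIT_JOB, Action.VIEW_LOGS},
    "auditor": {Action.VIEW_LOGS},
    "platform_admin": set(Action),  # every defined action
}

# In-memory audit trail: (user, role, action, allowed).
audit_log: list[tuple[str, str, Action, bool]] = []

def is_allowed(user: str, role: str, action: Action) -> bool:
    """Checks the role's permissions (deny by default) and records the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append((user, role, action, allowed))
    return allowed

print(is_allowed("user-a", "auditor", Action.SUBMIT_JOB))       # False
print(is_allowed("user-b", "data_scientist", Action.SUBMIT_JOB))  # True
```

Unknown roles receive an empty permission set, so the default outcome is denial, and denied attempts are logged alongside granted ones.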

Cost Efficiency

While GPU servers are expensive to procure, GPU as a Service models offer a pay-per-use structure that reduces capex and improves resource efficiency. But the cost advantage doesn’t stop there.

True cost-efficiency comes from:

- Avoiding idle GPU time (scale down when not in use)

- Using right-sized instances for specific training workloads

- Faster model completion, which shortens time-to-insight

- Lower personnel cost for infrastructure management

Comparing CPU and GPU hosting solely on hourly rates doesn’t reflect the full picture. The better measures are cost per completed job, output per unit of time, and agility in deployment.
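A short worked example shows why the hourly rate alone misleads. All numbers below are hypothetical, chosen only to illustrate the arithmetic, and the sketch assumes instances are billed only while the job runs (that is, scaled down afterwards). Cost per job is the hourly rate multiplied by runtime, so a more expensive instance is cheaper per job whenever its speedup exceeds its price ratio.

```python
from dataclasses import dataclass

@dataclass
class Option:
    name: str
    hourly_rate: float    # hypothetical price per instance-hour (any currency)
    hours_per_job: float  # hypothetical wall-clock time for one training run

    def cost_per_job(self) -> float:
        # Assumes billing stops when the job finishes (no idle time).
        return self.hourly_rate * self.hours_per_job

# Illustrative numbers only; not quotes or benchmarks.
cpu = Option("CPU instance", hourly_rate=2.0, hours_per_job=40.0)
gpu = Option("GPU instance", hourly_rate=12.0, hours_per_job=4.0)

for opt in (cpu, gpu):
    print(f"{opt.name}: {opt.cost_per_job():.2f} per job, "
          f"{opt.hours_per_job:g} h to result")
```

Here the GPU option costs six times more per hour but finishes ten times faster, so it comes out at 48.00 per job against 80.00, and delivers results in 4 hours instead of 40. If the speedup were smaller than the price ratio, the comparison would flip, which is why right-sizing matters.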

Strategic Planning for Enterprise Compute Workloads

For CTOs and tech leaders, the real value lies in planning for hybrid usage. The idea isn’t to move everything to GPU but to route specific enterprise compute workloads through GPU cloud environments when the need arises.

This includes:

- Running AI training on GPU while hosting model APIs on traditional hosting

- Storing datasets on object storage while processing on GPU VMs

- Pairing BI dashboards with GPU-backed analytics engines

The key is orchestration: allocating the right resource to the right task at the right time.
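The hybrid patterns listed above can be expressed as a simple routing table that sends each workload type to a GPU or CPU pool. The sketch below is hypothetical: the workload labels, pool names, and `route` function are assumptions for illustration, and real orchestration would typically also weigh queue depth, cost limits, and data location.

```python
from dataclasses import dataclass
from enum import Enum

class Pool(Enum):
    GPU = "gpu"
    CPU = "cpu"

@dataclass(frozen=True)
class Workload:
    name: str
    kind: str  # hypothetical labels, e.g. "training", "inference_api"

# Hypothetical routing table mirroring the hybrid patterns described above.
ROUTES: dict[str, Pool] = {
    "training": Pool.GPU,         # AI training on GPU
    "batch_analytics": Pool.GPU,  # GPU-backed analytics engines
    "inference_api": Pool.CPU,    # model APIs on traditional hosting
    "bi_dashboard": Pool.CPU,     # dashboards on traditional hosting
}

def route(workload: Workload) -> Pool:
    """Sends known parallel-heavy kinds to GPU; defaults everything else to CPU."""
    return ROUTES.get(workload.kind, Pool.CPU)

for w in (Workload("fraud-model-v2", "training"),
          Workload("fraud-scoring", "inference_api"),
          Workload("intranet-cms", "web")):
    print(w.name, "->", route(w).value)
```

Defaulting unknown workload types to the CPU pool keeps GPU capacity reserved for work that has been explicitly identified as benefiting from it.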

At ESDS, our GPU as a Service offering is designed for Indian enterprises seeking high-performance computing without infrastructure management overhead. Hosted in our compliant data centers, the GPU cloud platform supports:

- AI/ML workloads across sectors

- Scalable GPU capacity with real-time provisioning

- Secure, role-based access

- Integration with traditional hosting for hybrid deployments

We ensure your AI hosting in India stays local, compliant, and efficient, supporting your journey from data to insight and from prototype to production.

There’s no one-size-fits-all solution when it comes to compute strategy. The real advantage lies in understanding the nature of your enterprise compute workloads, identifying performance bottlenecks, and deploying infrastructure aligned to those needs. With GPU cloud models gaining traction and GPU as a Service becoming more accessible, tech leaders in India have the tools to execute AI and data-intensive strategies without overinvesting in infrastructure.

Traditional hosting remains relevant, but the workloads shaping the future will require parallelism, scalability, and specialized acceleration. Choosing the right blend, at the right time, is where enterprise advantage is created.
