How To Choose a Cloud GPU Provider In 2026

The ability of GPUs (Graphics Processing Units) to analyze large volumes of data rapidly has led to an increase in their use in machine learning (ML) and artificial intelligence (AI) applications. GPUs are perfect for compute-intensive applications because they are excellent at parallel processing, in contrast to CPUs, which handle jobs sequentially.

As processing demands have grown, GPU technology has advanced to supply more powerful resources, particularly for applications that involve high-definition visuals and sophisticated workloads such as deep learning and graphics rendering. GPUs provide the throughput required for dense, highly parallel tasks, while CPUs remain the foundation for general-purpose computation.

Many firms used on-premise GPUs in the past, but maintaining this hardware internally can be expensive and difficult. With GPU technology developing quickly, cloud-based GPUs have emerged as a compelling substitute, providing access to the newest hardware without the hassles of upkeep or large upfront expenditures.

In this post, we will look at the benefits and use cases of cloud-based GPUs, as well as how to choose the best cloud GPU provider.

Key takeaways:

- When selecting an inexpensive cloud GPU service, think about security and compliance needs, multi-cloud compatibility with Docker/Kubernetes, data transfer costs that go beyond hourly rates, and the provider’s ability to scale from prototyping to production workloads.

- To reduce GPU expenses, use model compression or quantization so workloads fit on less expensive instances, employ smart batching for inference, choose appropriately sized VRAM to prevent costly memory swapping, and keep GPU utilization above 70% to maximize ROI per billable hour.
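To make the second takeaway concrete, here is a minimal Python sketch of two back-of-the-envelope calculations: how quantization shrinks the memory needed for model weights, and how utilization and data transfer fees change the real cost of a billable hour. The function names, the 7-billion-parameter model, and every price below are hypothetical placeholders, not figures from any provider.

```python
def weights_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    Activations, optimizer state, and caches are NOT included, so real
    VRAM needs are higher than this estimate.
    """
    return num_params * bits_per_param / 8 / 1e9


def cost_per_useful_hour(
    hourly_rate: float,
    hours: float,
    utilization: float,
    egress_gb: float = 0.0,
    egress_rate_per_gb: float = 0.0,
) -> float:
    """Total bill (compute + data transfer) divided by hours of useful GPU work."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_bill = hourly_rate * hours + egress_gb * egress_rate_per_gb
    return total_bill / (hours * utilization)


# Hypothetical 7-billion-parameter model at 16-bit and 4-bit precision.
fp16_gb = weights_memory_gb(7e9, 16)
int4_gb = weights_memory_gb(7e9, 4)

# Hypothetical job: 100 billed hours at $2.00/hour, 500 GB of egress at $0.08/GB.
busy = cost_per_useful_hour(2.00, 100, 0.70, egress_gb=500, egress_rate_per_gb=0.08)
idle = cost_per_useful_hour(2.00, 100, 0.35, egress_gb=500, egress_rate_per_gb=0.08)

print(f"weights: {fp16_gb:.1f} GB at 16-bit, {int4_gb:.1f} GB at 4-bit")
print(f"cost per useful hour: ${busy:.2f} at 70% utilization, ${idle:.2f} at 35%")
```

Halving utilization doubles the cost of each useful hour, which is why the hourly rate alone is a poor basis for comparing providers.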

What are Cloud GPUs?

GPUs are processors built for specific workloads such as accelerating graphics rendering and running many computations simultaneously, using their high memory bandwidth and parallel processing capabilities. Unlike CPUs, which are designed for sequential processing, GPUs excel at handling many calculations at once. They are now essential for the dense processing needed in machine learning, 3D imaging, video editing, and gaming. For heavy, parallelizable computations, GPUs are typically far faster and more efficient than CPUs.

GPUs are significantly quicker than CPUs for deep learning because training is resource-intensive and the hundreds or thousands of cores in a GPU make it much easier to run these operations in parallel. With many convolutional and dense layers involved, training requires processing a large number of data points. For the large-scale input data and deep networks that define deep learning projects, this means many matrix operations between tensors, weights, and layers.

GPUs are far more effective than CPUs at running deep learning workloads because of their many cores, which let them execute these tensor operations in parallel, and their higher memory bandwidth, which lets them move data to and from those cores more quickly.
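As an illustration of why this work parallelizes so well, the sketch below writes a dense layer as a plain matrix multiplication in pure Python. Each output element depends only on one input row and one weight column, so every element could be computed by a separate core. The function names and layer sizes are illustrative assumptions, and real frameworks use optimized GPU kernels rather than loops like these.

```python
def dense_forward(x, w):
    """Naive dense layer without bias: x is (batch x n_in), w is (n_in x n_out).

    Every output element y[i][j] is an independent dot product, which is
    the property that lets a GPU compute many of them at the same time.
    """
    n_in = len(w)
    n_out = len(w[0])
    return [
        [sum(row[k] * w[k][j] for k in range(n_in)) for j in range(n_out)]
        for row in x
    ]


def dense_layer_flops(batch: int, n_in: int, n_out: int) -> int:
    """Multiply-add count for one forward pass (2 operations per weight per sample)."""
    return 2 * batch * n_in * n_out


# Tiny example: 2 samples, 2 input features, 3 output units.
x = [[1.0, 2.0], [3.0, 4.0]]
w = [[1.0, 0.0, 2.0], [0.0, 1.0, 3.0]]
y = dense_forward(x, w)
print(y)

# A single mid-sized layer already needs hundreds of millions of operations.
print(dense_layer_flops(batch=32, n_in=1024, n_out=4096))
```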

Why Should You Consider GPU Cloud?

While some users choose to keep GPUs on-site, cloud GPUs are becoming more and more popular. Custom installation, management, upkeep, and future upgrades are often costly and time-consuming for an on-premise GPU. In contrast, cloud platforms offer GPU instances that users simply access as a service at a reasonable cost, without handling any of those technical tasks. These platforms oversee the entire GPU infrastructure and offer the services needed to use GPUs for computing. Additionally, customers are not responsible for costly hardware upgrades, and they can switch between machine types as new models become available without buying new hardware.

By removing the technical procedures needed to self-manage on-premise GPUs, users can concentrate on their area of expertise, streamlining corporate operations and increasing output.
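One rough way to weigh the two options is a break-even estimate: how many months of cloud usage it takes before renting costs more than buying. The sketch below assumes a simple model with one upfront purchase, a flat monthly on-premise running cost, and a flat cloud hourly rate. All names and numbers are hypothetical, and it ignores depreciation, staffing, and price changes.

```python
from typing import Optional


def break_even_months(
    upfront_cost: float,
    on_prem_monthly_cost: float,
    cloud_hourly_rate: float,
    gpu_hours_per_month: float,
) -> Optional[float]:
    """Months until cumulative cloud spend exceeds on-premise spend.

    Returns None when the cloud is cheaper every month, in which case
    on-premise hardware never pays for itself under this simple model.
    """
    cloud_monthly = cloud_hourly_rate * gpu_hours_per_month
    monthly_saving = cloud_monthly - on_prem_monthly_cost
    if monthly_saving <= 0:
        return None
    return upfront_cost / monthly_saving


# Hypothetical figures: $30,000 server, $500/month to run, $2.50/hour in the cloud.
heavy_use = break_even_months(30_000, 500, 2.50, gpu_hours_per_month=600)
light_use = break_even_months(30_000, 500, 2.50, gpu_hours_per_month=100)

print("heavy use break-even (months):", heavy_use)
print("light use break-even (months):", light_use)
```

Under these assumed numbers, occasional workloads never reach break-even, while sustained heavy use does so only after a long period.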

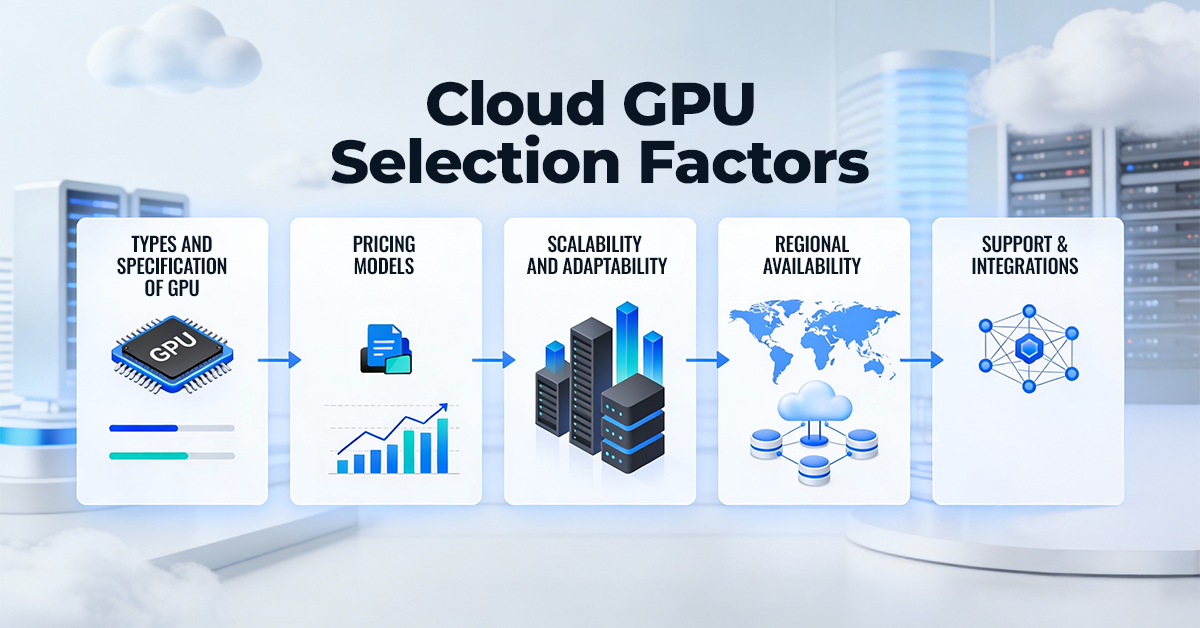

Factors to Consider When Choosing a GPU Cloud Provider

You can choose the best cloud GPU provider based on your unique requirements. The following are important factors to consider:

- Types and specifications of GPU instances: Different GPU models with different performance attributes are available from providers. Examine alternatives and evaluate their clock speed, memory, bandwidth, and core processing power.

- Pricing structures: Pay-as-you-go, per-second billing, and discounted spot instances are just a few of the flexible pricing options that most cloud providers offer. To avoid overspending on unused resources and to optimize cloud costs effectively, match the pricing model to your budget and usage pattern.

- Scalability and adaptability: Verify that your supplier can meet your demands both now and in the future. You may save money and preserve performance by adjusting resources according to demand using auto-scaling features.

- Regional availability: Take into account the locations of the provider’s data centers. For real-time applications, including those in sectors like banking and healthcare, geographically close servers boost performance and lower network latency.

- Support and integration: Seek out suppliers who provide robust customer support along with thorough integration with other cloud services. Smaller, more niche companies are frequently better at offering specialized, individualized services for particular sectors.
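The factors above can be combined into a simple weighted scoring matrix. The following Python sketch is one possible approach rather than a standard method: the weights, the 1-to-5 scores, and the provider names are all made-up placeholders that you would replace with your own assessment.

```python
# Hypothetical weights for the five factors listed above (they sum to 1.0).
WEIGHTS = {
    "gpu_specs": 0.30,
    "pricing": 0.25,
    "scalability": 0.20,
    "region": 0.15,
    "support": 0.10,
}


def weighted_score(scores: dict, weights: dict = WEIGHTS) -> float:
    """Weighted average of per-factor scores (for example, on a 1-5 scale)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * weights[name] for name in weights) / total_weight


# Placeholder providers with made-up scores.
providers = {
    "provider_a": {"gpu_specs": 5, "pricing": 2, "scalability": 4, "region": 3, "support": 3},
    "provider_b": {"gpu_specs": 3, "pricing": 5, "scalability": 3, "region": 4, "support": 4},
}

ranking = sorted(providers, key=lambda name: weighted_score(providers[name]), reverse=True)
for name in ranking:
    print(name, round(weighted_score(providers[name]), 2))
```

Changing the weights to reflect your priorities, such as raising `region` for latency-sensitive applications, can reorder the ranking, which is the point of making the trade-offs explicit.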

Pros of Using a GPU Cloud Provider

For good reason, cloud GPUs have become the preferred option as they enable you to experiment without incurring significant upfront expenditures, scale up or down as your needs change, and spin up powerful hardware in a matter of minutes. However, they have trade-offs that you should be aware of before using them, just like any other tool.

| Aspect | Pros |
| --- | --- |
| Cost Efficiency | Eliminates the need for heavy upfront hardware investments, making GPU compute accessible to startups and SMEs. |
| Scalability | Enables rapid scaling up or down based on workload demands without hardware limitations. |
| Performance Optimization | Allows deployment of workloads across multiple geographic regions for minimal latency and improved performance. |
| Disaster Recovery & Availability | Provides options for regional redundancy, high availability, and disaster recovery capabilities. |
| Maintenance & Upgrades | Removes the burden of hardware maintenance, upgrades, and infrastructure management. |

Conclusion

Scalable, high-performance computing has become essential as businesses move faster toward AI-driven transformation. By bridging the gap between innovation and infrastructure, cloud-based GPUs enable businesses to scale easily, save money on capital projects, and concentrate on creating smart solutions rather than maintaining hardware.

The ESDS GPU-as-a-Service platform is designed to power this next wave of innovation. Whether they are implementing real-time inference systems, simulating intricate datasets, or training massive language models, businesses benefit from secure Tier-III data centers, adherence to industry standards (GDPR, HIPAA, etc.), and adaptable pricing structures that maximize efficiency and performance.

Additionally, you may refer to the guide on 10 Ways to Reduce GPU Cloud Spend and Boost Performance.

- How To Choose a Cloud GPU Provider In 2026 - January 30, 2026
